
This article analyzes the landscape and characteristics of AI chips in China and abroad. The author argues that foreign chip giants hold most of the AI chip market and enjoy a commanding lead in both talent recruitment and acquisitions. Domestic AI start-ups, by contrast, present a chaotic picture in which a hundred schools of thought contend and each company goes its own way. In particular, each start-up's AI chip has its own unique architecture and software development kit, so it can neither integrate into the ecosystems established by Nvidia and Google nor muster the strength to compete with them.

If the human-computer match between AlphaGo and Lee Sedol in March 2016 made waves mainly in technology and Go circles, the May 2017 match against Ke Jie, the world's top-ranked Go player, pushed artificial intelligence technology into the public eye. AlphaGo is the first artificial intelligence program to defeat a human professional Go player and the first to defeat a Go world champion. It was developed by a team at Google's DeepMind led by Demis Hassabis, and its main working principle is "deep learning".

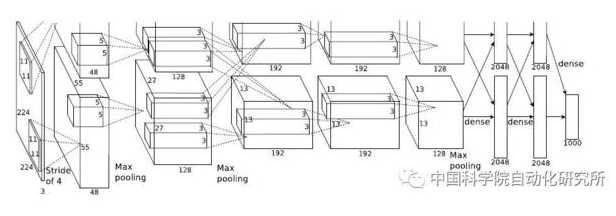

In fact, deep learning had already drawn wide attention in academia as early as 2012. In that year's ImageNet Large Scale Visual Recognition Challenge (ILSVRC), AlexNet, a neural network with 5 convolutional layers and 3 fully connected layers, achieved the best top-5 error rate to date (15.3%), while the second-place entry managed only 26.2%. Since then, networks with more layers and more complex structures have emerged, such as ResNet, GoogLeNet, VGGNet, and Mask R-CNN, as well as the generative adversarial network (GAN), which became popular last year.

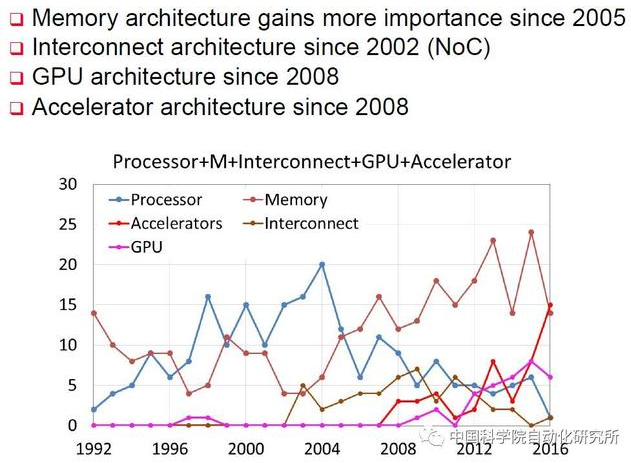
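For readers unfamiliar with the top-5 error rate quoted above, the following is a minimal sketch of how such a metric can be computed. It is an illustration only, not the official ILSVRC evaluation code: the function name `top_k_error` and the toy scores and labels are assumptions made up for this example. A prediction counts as correct when the true class is among the k highest-scoring classes.

```python
def top_k_error(scores, labels, k=5):
    """Fraction of samples whose true label is NOT among the k highest-scoring classes.

    scores: list of per-sample lists, one score per class (hypothetical model outputs)
    labels: list of true class indices, one per sample
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not labels:
        raise ValueError("at least one sample is required")

    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the best k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)


# Toy data: 4 samples, 6 classes (made-up numbers, not ImageNet results).
toy_scores = [
    [0.10, 0.50, 0.20, 0.90, 0.30, 0.00],  # true class 3 is ranked first
    [0.60, 0.10, 0.20, 0.05, 0.03, 0.02],  # true class 2 is ranked second
    [0.30, 0.25, 0.20, 0.15, 0.07, 0.03],  # true class 5 is ranked last
    [0.00, 0.10, 0.20, 0.30, 0.40, 0.50],  # true class 5 is ranked first
]
toy_labels = [3, 2, 5, 5]

top1 = top_k_error(toy_scores, toy_labels, k=1)  # 0.5: samples 2 and 3 miss
top5 = top_k_error(toy_scores, toy_labels, k=5)  # 0.25: only sample 3 misses
```

With 1,000 classes, as in ILSVRC, the top-5 criterion is far more forgiving than top-1, which is why the two figures are always reported separately.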

Whether it is AlexNet, which won the visual recognition challenge, or AlphaGo, which defeated Go champion Ke Jie, neither could have been realized without the core of modern information technology: the processor, whether that processor is a traditional CPU, a GPU, or an emerging dedicated accelerator, the NNPU (Neural Network Processing Unit). At ISCA 2016, the top international conference on computer architecture, there was a small workshop called Architecture 2030. There, Professor Xie Yuan of UCSB, a Hall of Fame member, surveyed the papers accepted at ISCA since 1991. Papers on dedicated accelerators began to appear in 2008 and peaked in 2016, surpassing the three traditional areas of processors, memory, and interconnects. That same year, the paper "Cambricon: An Instruction Set Architecture for Neural Networks", submitted by the research group of Chen Yunji and Chen Tianshi at the Institute of Computing Technology of the Chinese Academy of Sciences, was the highest-scoring paper at ISCA 2016.

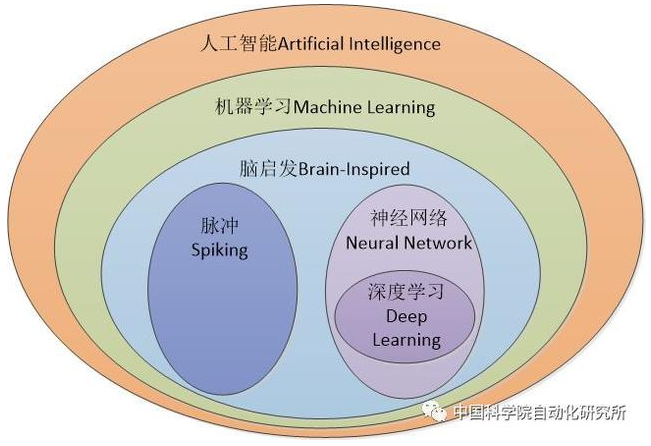

Before introducing AI chips at home and abroad in detail, some readers may be wondering: hasn't everything so far been about neural networks and deep learning? I therefore think it is necessary to explain the concepts of artificial intelligence and neural networks. This matters all the more because the "Three-year Action Plan for Promoting the Development of the New Generation of Artificial Intelligence Industry (2018-2020)", issued by the Ministry of Industry and Information Technology in 2017, describes its development goals in a way that makes it easy to conclude that artificial intelligence is neural networks and that AI chips are neural network chips. The plan states:

"The overall core foundational capabilities of artificial intelligence are significantly strengthened; smart sensor technologies and products achieve breakthroughs; design, foundry, and packaging and testing technologies reach international standards; neural network chips achieve mass production and large-scale application in key areas; and open source development platforms are initially able to support the rapid development of the industry."

This is not the case. Artificial intelligence is a very old concept, and neural networks are just one subset of it. As early as 1956, Turing Award winner John McCarthy, known as the "Father of Artificial Intelligence", defined artificial intelligence as the science and engineering of making intelligent machines. In 1959, Arthur Samuel defined machine learning, a subfield of artificial intelligence, as giving "computers the ability to learn without being explicitly programmed." This is currently recognized as the earliest and most accurate definition of machine learning. The neural networks and deep learning we hear about every day belong to the category of machine learning, and both were developed with inspiration from the mechanisms of the brain. Another important brain-inspired research field is the spiking neural network (SNN); the representative institutions and enterprises in China are the Brain-inspired Computing Research Center of Tsinghua University and Shanghai Xijing Technology.

With that background in place, I can finally introduce the development status of AI chips at home and abroad. Of course, these are only my personal observations and humble opinions.
